Usability Testing Questions: 7 Rules & 25 Examples

Maybe you just launched an app. Or maybe you are looking to improve your website. Whatever the case, new users’ ability to navigate your software intuitively is crucial to a successful product. This is where user experience testing comes in: even before your product ships to production, knowing exactly what needs to be improved, and where, can provide clarity and boost future sales. It can also save you thousands of hours and dollars on problems that would be far more costly to fix later. According to Uxeria, 88% of online customers do not return to websites that are not user-friendly, and 70% of online businesses fail because of bad usability.

The two most effective UX testing methods are a moderated usability testing study, in which a moderator asks users questions directly, and an unmoderated usability testing study, in which participants work through tasks on their own and record video-based feedback. PlaybookUX offers both, so you can choose whichever fits your needs. But within either kind of study, how do you know which questions will give you the most useful feedback for improving product usability? Let’s take a look at seven rules for asking effective usability testing questions.

7 rules of asking usability testing questions

1. Ask qualitative questions

At the end of the day, UX testing is largely qualitative: it generates data through direct observation of how people use your product. It answers the “why” and the “how,” two types of questions that yield descriptive information you can then use to generate solutions. The more descriptive your information, the more detailed your conclusions can be about how to fix the problems you are trying to address.

2. Don’t ask leading questions

Leading your participants in a certain direction does the opposite of what you want to accomplish. It may be tempting to influence your users’ answers, but ultimately you want the truth. For example, if you ask, “Why were you having difficulty navigating to this site?”, you prime participants to believe the task was difficult, even if they found it easy. A neutral alternative would be, “How did you find navigating to this site?”

Additionally, a participant who looked as if they were struggling might actually have been exploring different areas of the website or thinking about what to do next; their visible actions may not reflect their actual thought process. Instead of assuming, take a step back and let participants tell you what they were really thinking.

3. Ask open-ended usability questions

Open-ended questions require explanations, which give you insight into your participants’ thought processes as they use your product. A simple “yes” or “no” answer reveals little to nothing about why a user had a certain impression or ran into a certain difficulty. Open-ended usability questions are useful for probing, and they can also help participants feel more comfortable sharing their thoughts as the study goes on. Once participants are at ease with the study environment, they are more likely to open up, which gives you richer feedback.

4. Don’t ask for solutions

Asking participants for solutions may sound like a quick fix for your product’s problems, but participants often have difficulty articulating even what exactly confused them, let alone how to fix it. Instead of asking big questions, such as how they would solve a problem, ask smaller questions that help participants work through their thoughts more easily. Being specific makes your participants’ lives easier, and yours too: when it comes time to analyze your data, piece-by-piece answers give you a much better basis for a game plan than vague generalizations.

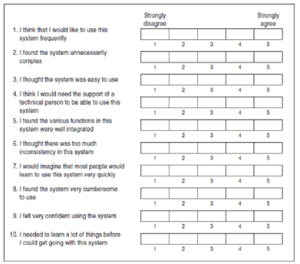

5. Ask System Usability Scale (SUS) questions

System Usability Scale (SUS) questions are usually asked at the end of a session, once participants have finished their tasks. They give participants a chance to reflect, and since John Brooke introduced the scale in 1986, SUS has become an industry standard thanks to its simplicity and reliability. It is particularly useful for comparing two different applications, and because it is easy to administer and does not depend on any particular technology, it can be used across websites, apps, and other platforms. SUS scores are also a useful usability metric for tracking your product’s improvement over time.

The SUS presents participants with ten statements and asks them to rate each one on a scale of 1 to 5, from 1 (strongly disagree) to 5 (strongly agree). A sample statement would be: “I found the system easy to use.” The statements alternate between positively and negatively worded items, so a participant who finds the system easy should agree with that statement but disagree with one such as “I found the system unnecessarily complex.”
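SUS responses are usually combined into a single score from 0 to 100. Under the standard scoring rule, each odd-numbered (positively worded) statement contributes its rating minus 1, each even-numbered (negatively worded) statement contributes 5 minus its rating, and the total is multiplied by 2.5. The Python sketch below applies that rule to one participant’s ten ratings; the sample answers are made up for illustration.

```python
def sus_score(responses: list[int]) -> float:
    """Compute a System Usability Scale score (0-100) for one participant.

    `responses` holds the ten SUS ratings in questionnaire order, each from
    1 (strongly disagree) to 5 (strongly agree).
    """
    if len(responses) != 10:
        raise ValueError("SUS uses exactly 10 statements")
    if any(r not in range(1, 6) for r in responses):
        raise ValueError("each rating must be an integer from 1 to 5")

    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if item_number % 2 == 1:
            # Odd items are positively worded: more agreement means better usability.
            total += rating - 1
        else:
            # Even items are negatively worded: less agreement means better usability.
            total += 5 - rating
    # The raw total runs from 0 to 40; multiplying by 2.5 maps it onto 0-100.
    return total * 2.5


# Hypothetical answers from one participant.
print(sus_score([4, 2, 5, 1, 4, 2, 5, 1, 4, 2]))
```

For those sample answers, the odd items contribute 17 points and the even items another 17, so the score is 34 × 2.5 = 85.0. The result is a score, not a percentage; to compare designs or track progress over time, you would typically average the per-participant scores across your whole sample.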

6. Ask Single Ease Questions (SEQ)

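You’ve probably seen this type of Likert-scale question before. The SEQ asks participants to rate, on a scale of 1 to 7, how difficult or easy a task was to complete. It is typically worded as “Overall, how difficult or easy was the task to complete?”, with 1 meaning very difficult and 7 meaning very easy. Unlike the SUS, which is asked once at the end of a session, the SEQ is asked right after each task, but like the SUS, it feeds into post-study analysis.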

One concern is that participants use the scale differently (for example, one user might rate everything 6 or 7 while another stays between 1 and 5), but these individual tendencies tend to average out across all users. Simplicity is one of the SEQ’s main selling points: not only is it easy to derive constructive data from SEQ responses, but according to MeasuringU, it performs just as well as more complex measures, such as Usability Magnitude Estimation.
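Because individual rating habits tend to average out across participants, SEQ results are commonly summarized as a mean rating per task. The Python sketch below averages ratings on a 1 (very difficult) to 7 (very easy) scale and ranks tasks from hardest to easiest; the task names and ratings are hypothetical.

```python
from statistics import mean

# Hypothetical SEQ ratings (1 = very difficult, 7 = very easy):
# one list per task, one rating per participant.
seq_ratings: dict[str, list[int]] = {
    "Find Product X": [6, 7, 5, 6, 7],
    "Apply a discount code": [3, 4, 2, 5, 3],
    "Update shipping address": [5, 6, 6, 4, 5],
}


def rank_tasks_by_difficulty(ratings: dict[str, list[int]]) -> list[tuple[str, float]]:
    """Return (task, mean SEQ) pairs, hardest task (lowest mean) first."""
    averages = [(task, mean(scores)) for task, scores in ratings.items()]
    return sorted(averages, key=lambda pair: pair[1])


for task, avg in rank_tasks_by_difficulty(seq_ratings):
    print(f"{task}: {avg:.1f}")
```

Sorting by the mean puts the tasks most in need of attention at the top of the list, which is a convenient starting point for deciding where to dig into participants’ open-ended comments.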

7. Ask Task Completion questions

These questions ask users whether they successfully completed a task you gave them, with the options “Yes,” “No,” and “I don’t know.” Answering requires users to recall their own behavior during the task, which highlights whether their perception matches what they actually did. After all, if a participant believes they finished a task when they actually did not, there is a major usability problem to address. The one downside to this method is that the answers are self-reported, which can lead to greater variability between users.

Some teams find it easier to observe directly whether participants completed a task. This can be done in a moderated test, where a moderator (usually you) facilitates the study, or in an unmoderated test, which keeps traceable records such as which buttons were clicked or which URLs were visited, although inferring task success from records like these can be much harder than watching it happen. If you want a simple, albeit somewhat biased, measure, task completion questions are the way to go. If you would rather verify task success yourself, observing it through a moderated or unmoderated test is preferable.
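To spot the gap between perception and execution, you can compare each participant’s self-reported answer with whether they actually succeeded. The Python sketch below assumes you have both for every participant, for example from a moderator’s notes or from click and URL logs; the `TaskResult` structure, participant IDs, and results are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TaskResult:
    participant: str
    self_report: str        # "Yes", "No", or "I don't know"
    observed_success: bool  # assumed available, e.g. judged by a moderator or from logs


def completion_summary(results: list[TaskResult]) -> dict:
    """Compare self-reported task completion with observed completion."""
    if not results:
        raise ValueError("need at least one result")
    n = len(results)
    # Only "Yes" counts as claiming success; "I don't know" does not.
    reported = sum(r.self_report == "Yes" for r in results)
    observed = sum(r.observed_success for r in results)
    # Participants who believe they finished but did not: the perception gap.
    believed_done_but_failed = [
        r.participant for r in results if r.self_report == "Yes" and not r.observed_success
    ]
    return {
        "self_reported_rate": reported / n,
        "observed_rate": observed / n,
        "believed_done_but_failed": believed_done_but_failed,
    }


# Hypothetical results for a single task.
results = [
    TaskResult("P1", "Yes", True),
    TaskResult("P2", "Yes", False),
    TaskResult("P3", "No", False),
    TaskResult("P4", "I don't know", True),
    TaskResult("P5", "Yes", True),
]
print(completion_summary(results))
```

In this made-up data, the self-reported and observed completion rates are both 0.6, yet P2 believes they finished a task they did not. Comparing answers participant by participant catches mismatches that matching totals can hide.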

If you are wondering when to use which type of question, think about when you would gather the most information from your participants. Usually, this is after participants have completed the study; however, you may also want to gauge reactions that do not depend on completing a specific task, such as impressions of a website’s aesthetics. Ask yourself: will my questions feed post-study or pre-study analysis? Also keep in mind that SUS, SEQ, and task completion questions all produce quantitative data, while open-ended questions produce qualitative data. An effective study mixes both types: numbers on their own show what happened but not why, and words on their own are hard to compare across participants or over time.

25 usability testing questions to ask

Now that we’ve covered the seven ground rules, let’s look at 25 examples of usability testing questions you can ask in your own UX tests.

Phase 1: User qualifying (screener) questions

The population you use for your study can be divided into two types: general and specific. A general population is drawn from a random pool of people spanning different demographics, such as age and gender, whereas a specific population consists of people who share common characteristics. Screener questions mostly ask participants to identify themselves. They are handy during the pre-test phase for filtering and recruiting the right people for your study. For example, perhaps your target audience is upper-middle-class professionals in the advertising industry. Instead of drawing from a group of participants who may not meet these conditions, screener questions narrow your pool down to only the categories you specify. Here are some examples:

- What is your age?

- What is your ethnicity?

- What is your annual household income?

- What is your current work status?

- Which industry do you work in?

- When was the last time you used this website/app, and how often do you use it?
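Once screener answers come in, filtering them is a matter of keeping only respondents whose answers pass every criterion you care about. The Python sketch below encodes the advertising-industry example from this section; the field names, respondents, and the income band standing in for “upper-middle class” are all hypothetical assumptions, not a standard definition.

```python
from typing import Any, Callable

# Hypothetical screener answers; field names and values are illustrative only.
respondents: list[dict[str, Any]] = [
    {"id": "R1", "age": 34, "industry": "Advertising", "household_income": 140_000},
    {"id": "R2", "age": 29, "industry": "Healthcare", "household_income": 150_000},
    {"id": "R3", "age": 41, "industry": "Advertising", "household_income": 60_000},
    {"id": "R4", "age": 38, "industry": "Advertising", "household_income": 120_000},
]

# Each criterion maps a screener field to a test the answer must pass.
# The income band below is an assumed stand-in for "upper-middle class".
criteria: dict[str, Callable[[Any], bool]] = {
    "industry": lambda answer: answer == "Advertising",
    "household_income": lambda answer: 100_000 <= answer <= 250_000,
}


def screen(people: list[dict[str, Any]], rules: dict[str, Callable[[Any], bool]]) -> list[str]:
    """Return the IDs of respondents whose answers pass every screening rule."""
    qualified = []
    for person in people:
        # A respondent who skipped a screened question does not qualify.
        if all(field in person and test(person[field]) for field, test in rules.items()):
            qualified.append(person["id"])
    return qualified


print(screen(respondents, criteria))
```

Here R2 is excluded by industry and R3 by income, leaving R1 and R4. Keeping each criterion as a separate rule makes it easy to loosen or tighten one condition without touching the others.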

Phase 2: User testing questions

Now that you have decided which types of people your study will recruit, the next phase deals with user testing questions. User testing questions dive into how users interact with your software, whether that software is a website or an app. This phase addresses what your study is actually for: finding the answers to your problems.

Website usability testing questions

Website usability questions are one type of user testing question. They look specifically at how easily visitors can navigate your website to see what you have to offer, such as a physical product. As mentioned earlier, people rarely return to websites that are not user-friendly, so you want your website to be as intuitive as possible. These questions help you pinpoint and understand the problems users run into while browsing your site, particularly with its layout.

- Find Product X. Speak your thoughts out loud.

- How did you find this task? (1=very difficult, 5=very easy)

- How would you improve the process of finding Product X?

Web/mobile app usability testing questions

Web/mobile app usability testing questions work like website usability questions but focus on how easily people can navigate your web or mobile app. They are open-ended questions that help uncover your participants’ thought processes as they explore your app.

- What parts of the web/mobile app did you like the most? Why?

- What parts of the web/mobile app did you use the least? Why?

- What did you think of the interface?

- What do you think about the way features and information were presented?

- Would you keep using this web/mobile app? Why or why not?

Concept usability testing questions

Concept testing questions are useful when you want to try out an idea, whether that is adding a new feature or moving into a new market. They gauge consumer reactions before you decide to implement the concept, which saves you time, money, and energy in the long run. They can also help you find solutions to problems your users are currently facing.

- How frequently, if at all, would you use this feature?

- How would you improve this feature?

- What would make you want to use this feature? What would make you not want to use it?

- What are your initial thoughts on this concept?

Other usability testing questions

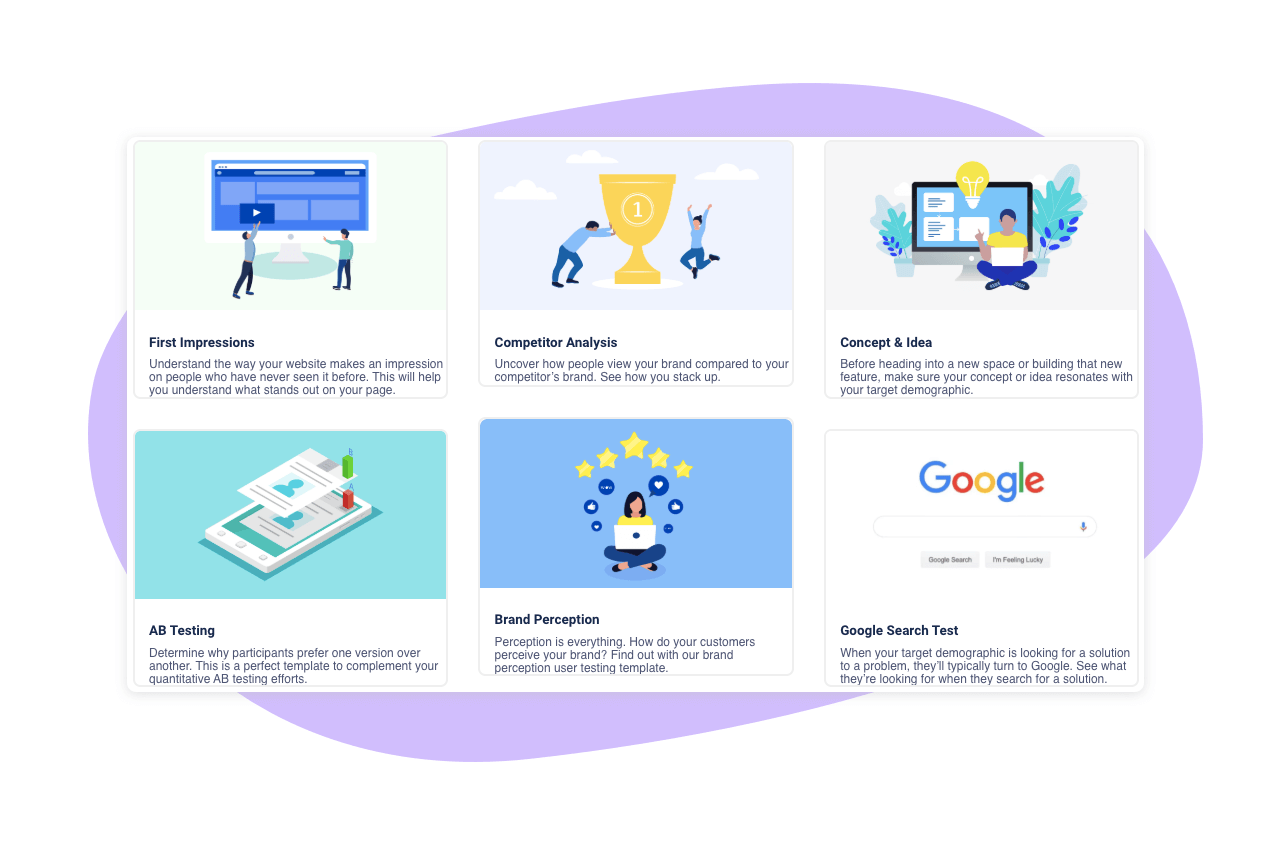

Other usability testing questions cover first impressions of your site, competitor analysis, logos, and brand perception, to name a few. These are easily accessible through PlaybookUX’s templates.

Each template concentrates on a specific issue you want to address, whether that is how participants perceive your website or how your brand compares with others. Examples of these questions, spread across a range of templates, include:

- At first glance, what is the purpose of this website?

- Did you successfully find what you were looking for, and if not, what were you expecting?

- Do you need more information to decide to purchase this product or not?

- Do you trust Company X? Do you trust Company Y?

- What are three words you would use to describe Company X? What are three words you would use to describe Company Y?

- Is this logo memorable? Is it trustworthy?

- Does this name remind you of any existing brands?

Depending on the company organizing your study, you can also decide whether to conduct it in person or remotely. This is a separate choice from the moderated and unmoderated studies mentioned earlier: moderated studies can run either in person or remotely, while unmoderated studies are typically remote. Both in-person and remote testing have their pros and cons. Remote testing is more flexible for participants, but there is a greater risk of technical difficulties. In-person research, on the other hand, gives you your participants’ complete attention, but the logistics of scheduling and travel can be frustrating to deal with. At PlaybookUX, we offer both options, so you can pick whichever works best for you.

You do not need to use every type of usability testing question mentioned here to run an effective user testing study. Instead, pick the questions that best suit what you want to accomplish. It takes time and practice to figure out which usability testing questions work best for your study, especially since the right questions depend on the scenario. Hopefully, this guide points you in the right direction as you dive into user testing.
